Oculus Quest took the virtual reality world by storm earlier this year and quickly garnered $5 million in content sales over its first two weeks on the market. An official sales tally for the standalone VR device has not been publicized, but some analysts estimate that it could reach 1 million units by the end of this year. At our Connect show in London, developers were over the moon about Quest, labeling it the first “major stepping stone” towards mainstream adoption of VR. 2020 may prove to be an even bigger step for VR, thanks to a spiffy new feature Facebook announced at Oculus Connect 6 today: controller-free hand tracking.

The idea instantly conjures up images of Tom Cruise in Minority Report, controlling virtual images with natural and intuitive hand gestures. The technology, which uses the existing external cameras on the Quest headset, is currently experimental for consumers and developers, Facebook CEO Mark Zuckerberg said on stage, but it could be just what VR needs to finally escape its niche status.

As Oculus explained on its official blog, “True hand-based input for VR will unlock new mechanics for VR developers and creators alike. Hand tracking on Quest will let people be more expressive in VR and connect on a deeper level in social experiences. Not only will the current community of VR enthusiasts and early adopters benefit from more natural forms of interaction, hand tracking on Quest will also reduce the barriers of entry to VR for people who may not be familiar or comfortable with gaming controllers.

“Even better, your hands are always with you and always on—you don’t have to grab a controller, keep it charged, or pair it with the headset to jump into VR. From entertainment use cases to education and enterprise, the possibilities are massive.”

Considering that the Quest is less powerful than the Oculus Rift and does all its processing without the help of a PC, the fact that it can track hands with any kind of precision is certainly an engineering feat. (Although it’s worth noting that Quest will be able to act as a Rift with the Link feature starting in November.)

Facebook’s research team explained in a separate post that Quest will leverage artificial intelligence and deep learning to infer the position and pose of a person’s hands and fingers from the headset’s camera images.

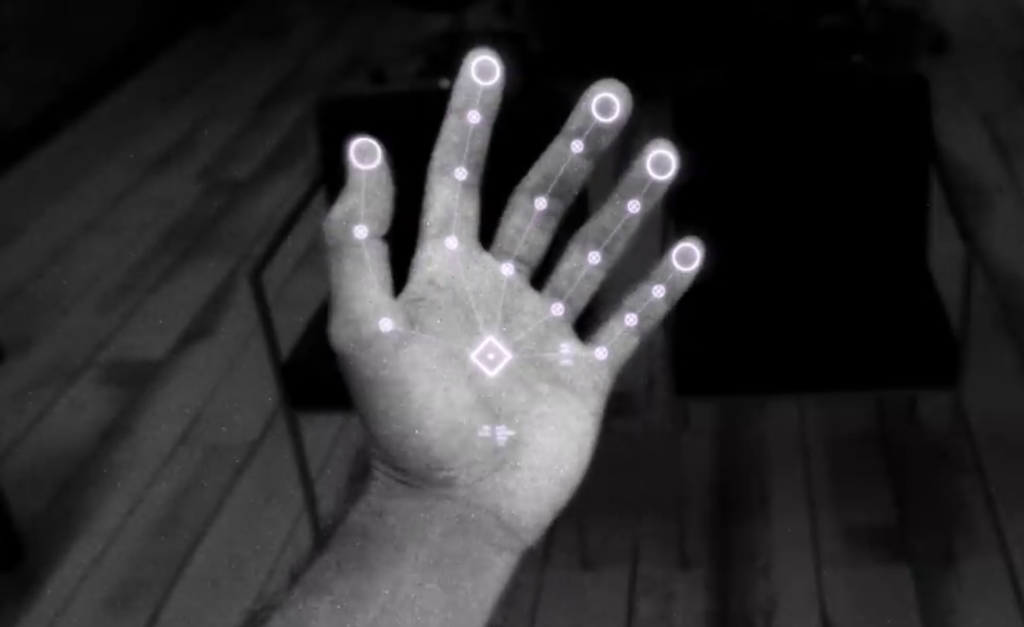

“Deep neural networks are used to predict the location of a person’s hands as well as landmarks, such as joints of the hands. These landmarks are then used to reconstruct a 26 degree-of-freedom pose of the person’s hands and fingers. The result is a 3D model that includes the configuration and surface geometry of the hand. APIs will enable developers to use these 3D models to enable new interaction mechanics in their apps or to drive a user interface,” the engineers wrote.

“We use a novel tracking architecture that produces accurate, low-jitter estimates of hand pose robustly across a wide range of environments, and an efficient, quantized neural network framework that enables real-time hand-tracking on a mobile processor, without compromising resources dedicated to user applications.”
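The engineers leave the “26 degree-of-freedom pose” abstract. One common way to count those degrees of freedom in hand-tracking work is six for the wrist’s global position and orientation plus 20 finger-joint angles, four per finger. The sketch below assumes that breakdown; the class name `HandPose`, its fields, and the per-finger split are hypothetical illustrations, not Oculus’s actual hand model or SDK API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

FINGERS = ("thumb", "index", "middle", "ring", "pinky")

# Assumption: 4 articulation angles per finger (e.g. three flexion joints
# plus one side-to-side spread at the base). Hypothetical, not Oculus's model.
ANGLES_PER_FINGER = 4


@dataclass
class HandPose:
    """Hypothetical 26-DoF hand pose: 6 global DoF + 20 finger-joint angles."""

    # Global wrist pose: 3 translation DoF (x, y, z) ...
    position: Tuple[float, float, float] = (0.0, 0.0, 0.0)
    # ... and 3 rotation DoF (e.g. roll, pitch, yaw in radians).
    rotation: Tuple[float, float, float] = (0.0, 0.0, 0.0)
    # Per-finger joint angles, keyed by finger name.
    finger_angles: Dict[str, Tuple[float, ...]] = field(
        default_factory=lambda: {f: (0.0,) * ANGLES_PER_FINGER for f in FINGERS}
    )

    def degrees_of_freedom(self) -> int:
        """Count global DoF plus articulation DoF."""
        global_dof = len(self.position) + len(self.rotation)
        articulation_dof = sum(len(a) for a in self.finger_angles.values())
        return global_dof + articulation_dof

    def to_vector(self) -> List[float]:
        """Flatten the pose into one list, wrist first, then fingers in order."""
        vec = list(self.position) + list(self.rotation)
        for finger in FINGERS:
            vec.extend(self.finger_angles[finger])
        return vec


if __name__ == "__main__":
    pose = HandPose()
    print(pose.degrees_of_freedom(), len(pose.to_vector()))
```

Under these assumptions, `HandPose().degrees_of_freedom()` returns 26, and `to_vector()` flattens the pose into a 26-element list of the kind an app might feed into gesture or UI logic.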

That last point is key, if it holds up in real-world usage. Hand tracking will undoubtedly open up a range of new possibilities, but if the Quest is chugging along while using the feature or if battery life significantly suffers, it may not be worth that trade-off for many users.

It’s also something that many developers may find challenging to adapt to, at least initially. VR itself presented game creators with a brand-new paradigm, and learning that “language of VR” is something that many, even now more than three years past Rift’s launch, have yet to master. Just as a number of studios have become accustomed to certain mechanics tied to the Oculus Touch or HTC Vive controllers, switching gears to full-on human hand tracking could be tough.

“I think it’s cool, and I’m excited for the tech and what people will build for it. Games that already use Oculus Touch or [Valve’s] Index controllers might struggle though, as they’ve been designed with those in mind,” Callum Underwood, executive producer on Superhot VR, told GameDaily. “When you point a gun in Superhot VR, in the real world your real hand is holding onto a real controller, and the presence that gives. Without a controller – you’re either finger-gunning or you’re gripping an imaginary handle.

“That said – I am very excited about it, and we’ll do what we can to get Superhot VR working with it. Very cool update from Oculus.”

Alex Schwartz and Cy Wise, the Owlchemy Labs co-founders who revealed their new XR studio, Absurd:joy, today, agree that hand tracking will be a challenge.

“Hands-free tracking is definitely exciting and also super difficult to get right! The major detail that I keep harping on is that raw hand input must meet a very high bar in order to be satisfying and useful for natural interactions,” Schwartz commented to GameDaily. “My philosophy has always been that computers should properly sense how humans naturally and intuitively interact with the world rather than technology requiring us to adapt or shape our hands in certain ways.”

Wise added, “If the sensors can accurately sense our hands and let us naturally reach out and grab without thinking about occlusion or cameras or tracking and it ‘just works’, then this will open the doors to all kinds of new interactions. Easier said than done, for sure!”

The hand tracking that Quest will incorporate next year seems to be right up Absurd:joy’s alley, as the new studio stresses that users shouldn’t be forced into “a new way to speak, gesture, or act because it’s easier to develop.”

It’s clear that both Schwartz and Wise have been thinking long and hard about hand presence in VR for some time. Their shared design philosophy seems to have evolved somewhat since 2018, when Schwartz told us during the DICE Summit that most interactions would require a controller because “you’re going to need true haptics represented somehow.”

“How are we going to stop our arms from moving forward?” he asked at the time. “That’s one of the hardest interaction problems of all time, some kind of thing that pushes back against your muscles or whatever, and we’re way far out from that. It looks good on TV, Minority Report or whatever, but it doesn’t feel right because your brain doesn’t accept that you’re doing the thing.”

Oculus plans to launch its hand tracking feature in early 2020, although an exact date was not specified. The Quest community will be given an opportunity to opt in to test the functionality. The feedback that Oculus and developers get from this early phase will be critical for fine-tuning hand tracking experiences.

VR is still very much in its infancy and is advancing at a rapid pace. While many have pointed to foveated rendering as one of the biggest game-changing features on the horizon, precise and accurate hand tracking that, as Wise says, “just works” could very well be a landmark moment for the VR/XR industry. Developers, are you ready to… ahem… have your hands full?

[Update 9/26/2019] GameDaily has received a bit more game developer reaction to the news that hand tracking is coming to Oculus Quest. Chris Hanney from I-Illusions (Space Pirate Trainer) commented that while he sees the benefits of hand tracking, he doesn’t feel it can ever fully replace controllers.

“Hand tracking certainly will make a great replacement for a lot of UI interaction, such as interacting with menus and scrollable content. The fact that it’ll be a software update for all Quests is already a huge thing, [and] I wouldn’t be surprised if Oculus launches a lower cost unit in the coming six months to replace the Go (like the Quest, but with less cameras, and no controllers) to replace the current Go but keep the price point around the same,” he remarked. “Having said all that – haptics is hugely important for immersive experiences, so I can’t see it ever replacing all interaction. Leap Motion was a great predecessor in that regard. Great for the occasional interaction but not a replacement to a tracked controller, in my opinion.”

GameDaily.biz © 2026 | All Rights Reserved.